- Strategic Implications of Fine Tuning LLM

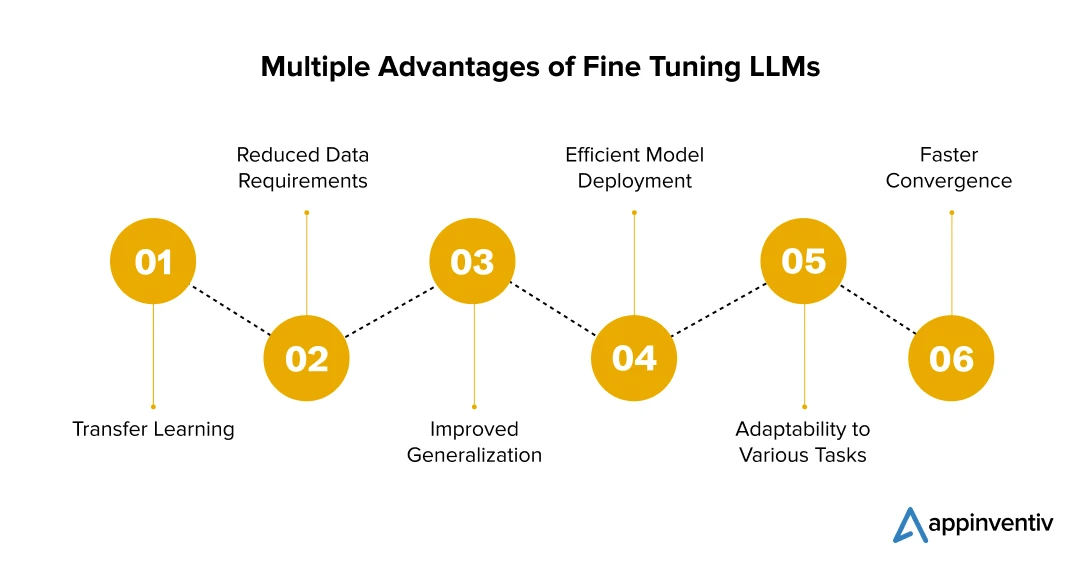

- 1. Transfer Learning

- 2. Reduced Data Requirements

- 3. Improved Generalization

- 4. Efficient Model Deployment

- 5. Adaptability to Various Tasks

- 6. Faster Convergence

- Core Applications of Fine Tuning LLMs

- 1. Enhancing Customer Interactions

- 2. Content Creation and Management

- 3. Business Insights from Unstructured Data

- 4. Personalization at Scale

- 5. Compliance Monitoring and Enforcement

- Methods to Approach Fine Tuning in Large Language Models

- a. Supervised Fine-Tuning

- b. Reinforcement Learning from Human Feedback (RLHF)

- LLM Fine Tuning Best Practices

- Use the Correct Pre-Trained Model

- Set Hyperparameters

- Evaluate Model Performance

- Try Multiple Data Formats

- Gather a Vast High-Quality Dataset

- Fine-Tune Subsets

- Overcoming LLM Fine Tuning Challenges

- Fine Tuning LLM Models in Business - The Way Forward

- FAQs

Over the past year and a half, the natural language processing (NLP) domain has undergone a massive transformation, driven by the popularization of LLMs. The natural language capabilities of these models have brought into existence applications that seemed impossible a few years ago.

Large language models (LLMs), with abilities ranging from language translation to sentiment analysis and text generation, are pushing the boundaries of what was previously considered unachievable. However, every AI enthusiast knows that building and training such models can be very time-consuming and expensive.

For this exact reason, LLM fine-tuning has been gaining prominence among businesses looking to adapt ready-made advanced models to their domain-specific needs or tasks. For those building their processes around NLP and generative AI, fine-tuning large language models promises to enhance a model’s performance on specialized tasks, significantly broadening its applicability across multiple fields.

With a range of emerging benefits under its belt, fine-tuning large language models is slowly becoming entrepreneurs’ answer to cost-effective artificial intelligence customization. In this article, we explore the multiple facets of the process and its implications for your business, ultimately helping you decide whether the investment is worth it.

Strategic Implications of Fine Tuning LLM

AI-savvy businesses know that developing an LLM is easier said than done. One cannot simply “apply” a foundation model to a giant database of text.

Purely from a technical standpoint, an LLM can be described as a giant data-processing function that must be precisely trained to produce a usable model. The textual data must first be converted into numerical form through tokenization and vocabulary mapping, after which LLM training starts. This process requires state-of-the-art hardware, huge amounts of money, and months of development time.
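To make tokenization and vocabulary mapping concrete, here is a minimal sketch in Python. It assumes a toy word-level tokenizer and a vocabulary built from a two-sentence corpus; production LLMs instead use learned subword tokenizers (such as byte-pair encoding) with far larger vocabularies, so treat this only as an illustration of turning text into integer ids.

```python
import re


def tokenize(text: str) -> list[str]:
    # Toy word-level tokenizer: lowercase, then split into words and punctuation marks.
    return re.findall(r"\w+|[^\w\s]", text.lower())


def build_vocab(corpus: list[str]) -> dict[str, int]:
    # Reserve id 0 for unknown tokens, then assign ids in order of first appearance.
    vocab = {"<unk>": 0}
    for text in corpus:
        for token in tokenize(text):
            if token not in vocab:
                vocab[token] = len(vocab)
    return vocab


def encode(text: str, vocab: dict[str, int]) -> list[int]:
    # Map every token to its id, falling back to <unk> for tokens never seen before.
    return [vocab.get(token, vocab["<unk>"]) for token in tokenize(text)]


corpus = ["Fine-tuning adapts a model.", "A model learns from data."]
vocab = build_vocab(corpus)
print(encode("A model adapts to new data!", vocab))  # [5, 6, 4, 0, 0, 10, 0]
```

Words the vocabulary has never seen ("to", "new", "!") all collapse to the `<unk>` id, which is one reason real tokenizers split text into subword pieces instead.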

Thankfully, organizations like Meta, OpenAI, and Google are very proactive when it comes to building LLMs, and with their deep pockets and dedicated research teams, they can fund development efforts and train useful LLMs.

With a model readily available to be fine-tuned, small and medium-sized businesses can fast-track their LLM ventures.

The advantages of fine-tuning large language models are not limited to exploring new use cases (although that is a major benefit); fine-tuning has several macro- and micro-level impacts that directly set businesses apart from the competition.

1. Transfer Learning

Fine-tuning an LLM reuses the knowledge gathered in the pre-training stage, meaning the model already understands syntax, language, and context from a varied dataset. Transferring this knowledge to a specific task during fine-tuning saves a lot of computation time, money, and resources compared to training the model from scratch.

2. Reduced Data Requirements

Fine-tuning often requires much less labeled data than training a model from scratch. The pre-trained model already knows general language features, so fine-tuning only has to focus on adapting those features to the specifics of the target task. This is particularly beneficial when generating or gathering large labeled datasets is expensive or challenging.

3. Improved Generalization

Another key advantage of fine-tuning large language models is that the process lets models fit well to key tasks or business domains. The pre-trained model, built on varied linguistic patterns, captures a wide range of general language features. Fine-tuning tailors these features to the target task, leading to models that perform well on a range of related tasks.

4. Efficient Model Deployment

Fine-tuning large language models produces models that are more efficient and ready for deployment in real-world applications. The pre-training stage captures a general understanding of language, which fine-tuning then augments and customizes for the model’s business-specific applications. This can lead to models that are computationally efficient and well-suited for tasks with very specific requirements.

5. Adaptability to Various Tasks

Once fine-tuned, LLMs can be used for a vast range of tasks. Be it sentiment analysis, question answering, summarization, or another natural language processing activity, fine-tuning lets the same pre-trained model be adapted to excel in multiple applications without the need to build task-specific model architectures.

6. Faster Convergence

Training an LLM from scratch can be extremely expensive and time-consuming computationally. Fine-tuned models, on the other hand, tend to converge faster because training starts from weights that already capture general language features. This comes in handy when a quick adaptation to a new task is needed.
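The intuition behind transfer learning and faster convergence can be illustrated with a deliberately tiny analogy. The sketch below is a toy, not an LLM: a two-weight linear model is first trained on a “source” task (standing in for pre-training), then trained on a related “target” task once from zero weights and once from the pre-trained weights, counting the gradient-descent steps each run needs to reach the same loss. All data, task weights, and hyperparameter values are hypothetical.

```python
def make_data(true_w):
    # Fixed 2-feature inputs; targets come from a linear "task" defined by true_w.
    inputs = [(1.0, 0.0), (0.0, 1.0), (1.0, 1.0), (2.0, -1.0), (-1.0, 2.0)]
    return [((x0, x1), true_w[0] * x0 + true_w[1] * x1) for x0, x1 in inputs]


def mse(w, data):
    return sum((w[0] * x0 + w[1] * x1 - y) ** 2 for (x0, x1), y in data) / len(data)


def train(w, data, lr=0.05, tol=1e-4, max_steps=10_000):
    # Plain gradient descent on mean squared error; returns final weights and steps taken.
    w = list(w)
    for step in range(max_steps):
        if mse(w, data) < tol:
            return w, step
        g0 = g1 = 0.0
        for (x0, x1), y in data:
            err = w[0] * x0 + w[1] * x1 - y
            g0 += 2 * err * x0 / len(data)
            g1 += 2 * err * x1 / len(data)
        w[0] -= lr * g0
        w[1] -= lr * g1
    return w, max_steps


source = make_data((2.0, -1.0))  # stand-in for broad "pre-training" data
target = make_data((2.2, -0.9))  # a related downstream task

pretrained_w, _ = train((0.0, 0.0), source)
_, steps_from_scratch = train((0.0, 0.0), target)
_, steps_fine_tuned = train(pretrained_w, target)
print(steps_from_scratch, steps_fine_tuned)
```

Because the pre-trained weights already sit close to the target solution, the warm-started run reaches the tolerance in fewer steps; with real LLMs the same effect plays out across billions of parameters rather than two.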

Businesses are still exploring how important fine-tuning is for deploying unique LLM-powered AI applications quickly and cost-effectively. While its clear benefits make a persuasive case, businesses would also benefit from knowing how the process is applied at an industry level.

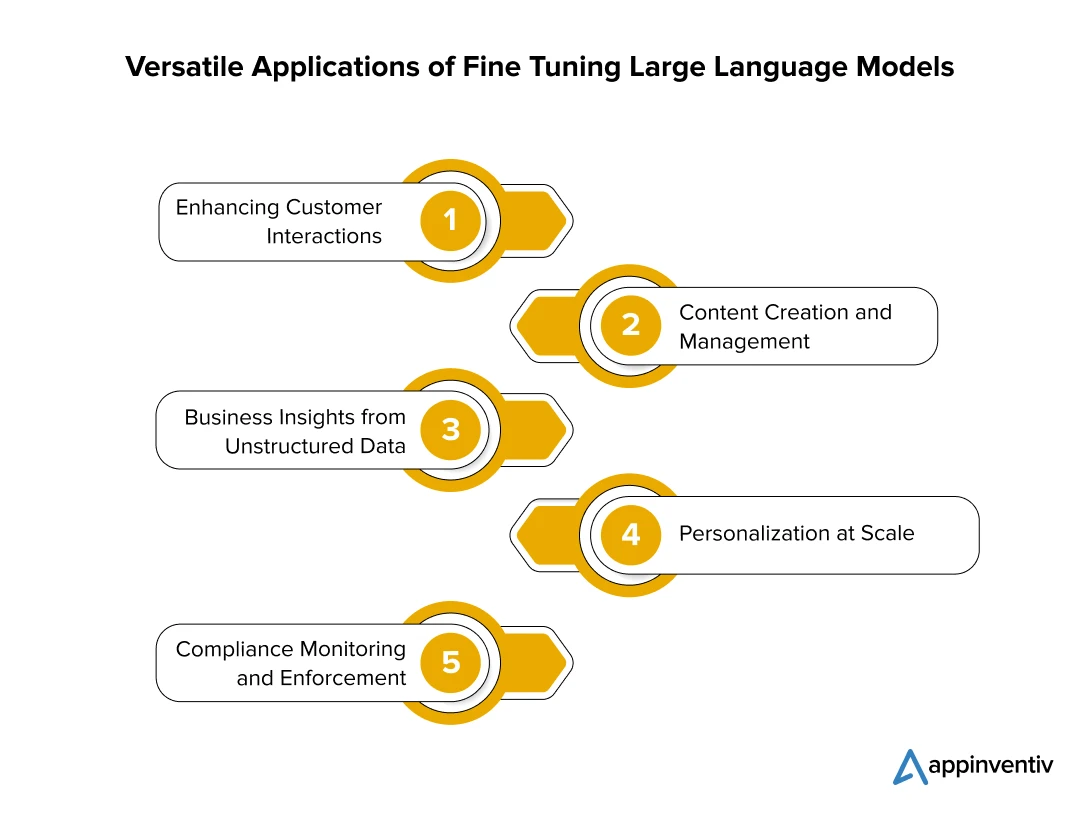

Core Applications of Fine Tuning LLMs

Large Language Models, when fine-tuned, offer unparalleled advantages in various business functions. By customizing these models to understand and generate text that aligns closely with specific industry needs, organizations can achieve higher accuracy, relevancy, and effectiveness in their operations. Here are some primary applications where fine-tuned LLMs are transforming industries:

1. Enhancing Customer Interactions

In today’s customer-centric business environment, personalization is key. Fine-tuned LLMs can be employed to manage customer service interactions, providing responses that are not only quick but also tailored to the individual needs of customers. For industries ranging from retail to banking, this means improved customer satisfaction and loyalty as artificial intelligence handles inquiries with human-like understanding, freeing up human agents to tackle more complex issues.

Example: A telecommunications company uses a fine-tuned LLM to handle routine customer queries and complaints through their online chat service, allowing them to maintain 24/7 support without compromising quality.

2. Content Creation and Management

Content creation is one of the most prominent use cases for fine-tuned LLMs: marketing departments can use them to generate creative, engaging content that resonates with their target audience. Whether it’s crafting personalized emails, generating product descriptions, or creating engaging blog posts, fine-tuned LLMs help ensure that the content is original and aligned with the brand’s voice and compliance requirements.

Example: A travel agency utilizes a fine-tuned LLM to create detailed travel itineraries and guides based on user preferences and past behavior, enhancing user engagement and boosting sales conversions.

3. Business Insights from Unstructured Data

LLMs are adept at processing and analyzing large volumes of unstructured text data – such as customer feedback, social media comments, and product reviews – to extract valuable insights. Fine-tuning allows these models to focus on industry-specific terminology and contexts, providing businesses with actionable intelligence that can inform product development, marketing strategies, and customer service improvements.

Example: A healthcare provider uses a fine-tuned LLM to analyze patient feedback across various platforms to identify common concerns and areas for service improvement.

4. Personalization at Scale

Fine-tuned LLMs can drive personalization efforts across various customer touch points. By understanding individual customer preferences and behaviors, these models can recommend products, customize search results, and even tailor news feeds in real-time.

Example: An e-commerce platform employs a fine-tuned LLM to provide personalized shopping experiences by recommending products based on browsing history and purchase behavior, significantly increasing repeat customer rates.

5. Compliance Monitoring and Enforcement

For heavily regulated industries, such as finance and healthcare, ensuring compliance with laws and regulations is critical. Fine-tuned LLMs can monitor communications and operations for potential compliance issues, automatically flagging risks before they become costly violations.

Example: A financial institution integrates a fine-tuned LLM to monitor and analyze customer interactions and internal communications to ensure adherence to financial regulations and prevent fraud.

The ability of LLMs to adapt to specific business needs through fine-tuning not only enhances their effectiveness but also makes them indispensable tools in the digital transformation journey of any industry. By implementing fine-tuned LLMs, businesses can optimize their operations, innovate their services, and deliver exceptional value to their customers, thereby securing a competitive edge in the marketplace.

Since a lot rides on the correct implementation of LLM fine tuning, let us look into the different ways businesses can ask their partner AI development company to fine-tune the models.

Methods to Approach Fine Tuning in Large Language Models

Multiple LLM fine-tuning methods can be used to adjust a model’s behavior to a specific requirement. At a high level, however, we can group them into two categories: supervised fine-tuning and reinforcement learning from human feedback.

a. Supervised Fine-Tuning

In this approach, the model is trained on task-specific labeled datasets, where every input data point is paired with the correct label or answer. The model then adjusts its parameters to predict these labels as accurately as possible. This method guides the LLM to apply its pre-existing knowledge, gathered by pre-training on a massive dataset, to that specific task.

Supervised fine-tuning is known to significantly improve a model’s performance on the target task, making it an efficient method for businesses looking to customize LLMs.
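In supervised fine-tuning of a causal language model, a common convention is to feed the prompt and the answer as one sequence but compute the loss only on the answer tokens. The sketch below assumes that convention and uses -100 as the “ignore” label, which is the default `ignore_index` of PyTorch’s `CrossEntropyLoss`; the token ids are hypothetical placeholders rather than output from a real tokenizer.

```python
IGNORE_INDEX = -100  # PyTorch's CrossEntropyLoss skips targets equal to its ignore_index (default -100)


def build_sft_example(prompt_ids, answer_ids, eos_id):
    # The model sees the full sequence, but only answer tokens (and EOS) carry a training signal.
    input_ids = prompt_ids + answer_ids + [eos_id]
    labels = [IGNORE_INDEX] * len(prompt_ids) + answer_ids + [eos_id]
    return input_ids, labels


def masked_mean_loss(per_token_loss, labels):
    # Average the per-token losses only over positions that are not masked out.
    kept = [loss for loss, label in zip(per_token_loss, labels) if label != IGNORE_INDEX]
    return sum(kept) / len(kept)


input_ids, labels = build_sft_example([101, 7, 8], [42, 43], eos_id=2)
print(input_ids)  # [101, 7, 8, 42, 43, 2]
print(labels)     # [-100, -100, -100, 42, 43, 2]
print(masked_mean_loss([5.0, 5.0, 5.0, 1.0, 2.0, 3.0], labels))  # 2.0
```

Hugging Face causal-LM models shift labels by one position internally, which is why the labels here stay aligned with `input_ids`; if you compute the loss yourself, you would need to apply that shift.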

The most commonly used supervised LLM fine-tuning methods are:

1. Basic Hyperparameter Tuning

It is a straightforward approach that consists of manually adjusting model hyperparameters, such as the batch size, learning rate, and number of epochs, until the desired performance is achieved.

The goal here is to find hyperparameters that allow the model to learn effectively from data while balancing the trade-off between the risk of overfitting and learning speed. Ultimately, well-chosen hyperparameters can substantially improve the model’s performance on a specific task.
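Manual tuning often turns into a small, systematic sweep. The following sketch, assuming a hypothetical one-parameter model and toy training and validation data, tries every combination of learning rate and epoch count and keeps the one with the lowest validation error, which is the basic loop behind grid search.

```python
from itertools import product

# Hypothetical toy data: y is roughly 3 * x.
train_data = [(1.0, 3.1), (2.0, 5.9), (3.0, 9.2), (4.0, 11.8)]
val_data = [(5.0, 15.1), (6.0, 17.8)]


def fit(lr, epochs):
    # Full-batch gradient descent for the one-parameter model y = w * x.
    w = 0.0
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in train_data) / len(train_data)
        w -= lr * grad
    return w


def val_mse(w):
    # Score on held-out data, not on the data the model was trained on.
    return sum((w * x - y) ** 2 for x, y in val_data) / len(val_data)


grid = {"lr": [0.001, 0.01, 0.1], "epochs": [5, 50]}
results = {
    (lr, epochs): val_mse(fit(lr, epochs))
    for lr, epochs in product(grid["lr"], grid["epochs"])
}
best_lr, best_epochs = min(results, key=results.get)
print(f"best lr={best_lr}, epochs={best_epochs}, val MSE={results[(best_lr, best_epochs)]:.4f}")
```

For an LLM, each grid cell would be a full fine-tuning run, which is why such sweeps are usually kept small.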

2. Transfer Learning

This technique comes in particularly handy when businesses are working with limited task-specific data. In this method, a model that has been pre-trained on a massive, general dataset is used as the starting point.

The model is then fine-tuned on task-specific data, enabling it to adapt its pre-existing knowledge to the new task. This significantly lowers data requirements and training time, while typically delivering better performance than training a model from scratch.

3. Multi-Task Learning

Here the LLM is fine-tuned on several related tasks simultaneously. The idea behind this popular method is to use the commonalities and differences across the tasks to improve the model’s overall performance. By learning to perform multiple tasks together, the model can build a more generalized and robust understanding of the data.

This approach tends to improve LLM performance, especially when the tasks are closely related or when data for individual tasks is limited. Because it relies on a labeled dataset for every task, multi-task learning is a form of supervised fine-tuning.
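One common way to train a single model on several tasks is to mix their data when building training batches. The sketch below assumes examples-proportional mixing (each task is sampled in proportion to its dataset size) and a simple weighted sum of per-task losses; the task names, sizes, and loss values are hypothetical, and real setups often tune or temperature-scale these weights.

```python
import random


def mixing_weights(task_sizes):
    # Examples-proportional mixing: each task is sampled in proportion to its dataset size.
    total = sum(task_sizes.values())
    return {task: size / total for task, size in task_sizes.items()}


def task_schedule(task_sizes, num_batches, seed=0):
    # Decide which task each training batch for the shared model is drawn from.
    weights = mixing_weights(task_sizes)
    tasks = list(weights)
    rng = random.Random(seed)
    return rng.choices(tasks, weights=[weights[t] for t in tasks], k=num_batches)


def combined_loss(task_losses, weights):
    # Weighted sum of per-task losses that one set of shared parameters is trained to minimize.
    return sum(weights[task] * loss for task, loss in task_losses.items())


sizes = {"sentiment": 3000, "summarization": 1000}  # hypothetical task datasets
weights = mixing_weights(sizes)
print(weights)  # {'sentiment': 0.75, 'summarization': 0.25}
print(task_schedule(sizes, num_batches=8))
print(combined_loss({"sentiment": 0.4, "summarization": 1.2}, weights))
```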

4. Few-Shot Learning

This method enables a model to adapt to a new task with very little task-specific data. The idea is to use the knowledge the model has gained through pre-training to learn from only a few examples of the new task. This is useful when task-specific labeled data is limited or very expensive.

In this approach, the fine-tuned model is given a few examples, also known as “shots,” at inference time to learn a new task. This few-shot learning guides the model’s predictions by supplying examples and context directly in the prompt; the examples themselves do not update the model’s weights.
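Few-shot examples are usually supplied as part of the prompt text. A minimal sketch of assembling such a prompt, assuming a hypothetical sentiment-classification task and a plain “Review / Sentiment” layout, might look like this:

```python
def build_few_shot_prompt(instruction, examples, query):
    # Each (text, label) pair becomes one "shot"; the query is left for the model to complete.
    lines = [instruction, ""]
    for text, label in examples:
        lines += [f"Review: {text}", f"Sentiment: {label}", ""]
    lines += [f"Review: {query}", "Sentiment:"]
    return "\n".join(lines)


shots = [
    ("The setup took two minutes and works perfectly.", "positive"),
    ("Support never answered my ticket.", "negative"),
]
prompt = build_few_shot_prompt(
    "Classify the sentiment of each customer review as positive or negative.",
    shots,
    "Delivery was late, but the product itself is great.",
)
print(prompt)
```

The exact layout is a design choice; what matters is that the shots and the final query follow the same pattern, so the model can continue it.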

5. Task-Specific Fine-Tuning

This fine-tuning process adapts the LLM’s parameters to the needs and complexities of a specific task, enhancing its relevance and performance in that domain. Task-specific fine-tuning is useful when businesses need to optimize a model’s performance for a specific, well-defined task, helping ensure that the model generates accurate task-specific content.

Task-specific fine-tuning is often confused with transfer learning. However, transfer learning is about reusing the general features the model has learned, whereas task-specific fine-tuning is about adapting the model to the key requirements of the new task.

b. Reinforcement Learning from Human Feedback (RLHF)

RLHF is a revolutionary set of LLM fine-tuning methods that train LLMs through human interaction. By adding a human angle to the learning process, RLHF enables continuous improvement of language models so that they produce more accurate and contextually appropriate responses.

This approach doesn’t just draw on human evaluators’ expertise; it also enables the LLM to evolve based on real-world feedback, ultimately giving it more refined and effective capabilities.

The most commonly used RLHF techniques are:

1. Reward Modeling

In this approach, the LLM generates several actions or outputs, which human evaluators then rate based on quality. Over time, a reward model learns to predict these human-provided ratings, and the LLM adjusts its behavior to produce higher-rated outputs.

The reward modeling technique offers a practical way to incorporate human judgment into the LLM learning process, enabling the model to learn complex tasks that are difficult to define with a simple function.

2. Proximal Policy Optimization (PPO)

PPO is an iterative algorithm that updates a language model’s policy to maximize the reward. The core idea of PPO is to take update steps that improve the policy while ensuring the new policy does not move too far from the previous one. This balance is achieved by constraining policy updates so that large, potentially harmful updates are prevented while useful small updates still go through.

This constraint is enforced through a surrogate objective function that clips the probability ratio between the new and old policies. This makes the algorithm more stable and efficient than many earlier policy-gradient methods.
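The clipped surrogate objective can be written as L(θ) = E[min(r·A, clip(r, 1 - ε, 1 + ε)·A)], where r is the ratio between the new and old policy’s probability of an action and A is the advantage estimate. The following Python sketch computes it for a batch of per-action log-probabilities; it assumes the advantages are already given and uses ε = 0.2, a commonly used default, rather than reproducing any particular library’s implementation.

```python
import math


def ppo_clipped_objective(logp_new, logp_old, advantages, epsilon=0.2):
    # Mean of min(r * A, clip(r, 1 - eps, 1 + eps) * A), where r = pi_new / pi_old.
    total = 0.0
    for new, old, adv in zip(logp_new, logp_old, advantages):
        ratio = math.exp(new - old)
        clipped_ratio = min(max(ratio, 1 - epsilon), 1 + epsilon)
        total += min(ratio * adv, clipped_ratio * adv)
    return total / len(advantages)  # maximize this (or minimize its negative)


# A good action became 1.5x more likely: the credited gain is capped at 1 + eps = 1.2.
print(ppo_clipped_objective([math.log(1.5)], [0.0], [1.0]))
# A bad action became half as likely: pushing below 1 - eps earns no extra credit (-0.8, not -0.5).
print(ppo_clipped_objective([math.log(0.5)], [0.0], [-1.0]))
```

RLHF pipelines typically add further terms around this core, such as a KL penalty that keeps the fine-tuned model close to the original one.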

3. Comparative Ranking

This one is fairly similar to the reward modeling technique, the only difference being that the model learns from relative rankings of different outputs provided by human evaluators, focusing on the comparison between multiple outputs.

In this technique, the LLM generates different actions and outputs, which human evaluators rank based on quality or correctness. The model then adjusts its behavior to create outputs that human evaluators rank higher.
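One common way to learn from rankings is to expand each ranking of K outputs into all K(K-1)/2 pairwise comparisons and train on those pairs, as described for InstructGPT. The sketch assumes the ranking is given best-first; the output names are hypothetical.

```python
from itertools import combinations


def ranking_to_pairs(ranked_outputs):
    # ranked_outputs is ordered best-first, so in every combination the first item is preferred.
    return list(combinations(ranked_outputs, 2))


ranking = ["answer_B", "answer_A", "answer_C"]  # hypothetical ranking by a human evaluator
for preferred, rejected in ranking_to_pairs(ranking):
    print(f"{preferred} > {rejected}")
```

A single ranking of four outputs already yields six training pairs, which is part of why ranking is an efficient way to collect feedback.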

4. Preference Learning

Preference learning trains models to learn from human preferences between actions, states, or trajectories. In this approach, the LLM produces multiple outputs, and human evaluators indicate which output in each pair they prefer.

The technique helps the model adjust its behavior to create outputs that align with human evaluators’ choices. This is useful when output quality is hard to quantify with a numerical reward but it is easy to express a preference between two or more outputs. This technique also allows LLMs to learn complex tasks, making it effective for LLM fine-tuning in real-life applications.
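A standard way to learn from pairwise preferences is the Bradley-Terry model: the probability that one output is preferred is the sigmoid of the difference between their scores, and training minimizes the negative log of that probability. The sketch below assumes a reward model has already produced scalar scores for each output; the scores themselves are hypothetical.

```python
import math


def softplus(x):
    # Numerically stable log(1 + exp(x)).
    return x + math.log1p(math.exp(-x)) if x > 0 else math.log1p(math.exp(x))


def preference_probability(r_chosen, r_rejected):
    # Bradley-Terry: P(chosen is preferred) = sigmoid(r_chosen - r_rejected).
    return 1.0 / (1.0 + math.exp(-(r_chosen - r_rejected)))


def preference_loss(r_chosen, r_rejected):
    # -log sigmoid(r_chosen - r_rejected), written as softplus(r_rejected - r_chosen).
    return softplus(r_rejected - r_chosen)


print(preference_loss(2.0, 0.0))  # small: the scores already agree with the human preference
print(preference_loss(0.0, 2.0))  # large: the scores disagree, so training pushes them apart
```

Minimizing this loss over many labeled pairs pushes the reward model to score preferred outputs higher; the resulting rewards can then drive an RL step such as PPO.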

5. Parameter Efficient Fine-Tuning (PEFT)

PEFT is used to improve a pre-trained LLM’s performance on specific downstream tasks while reducing the number of trainable parameters. It offers a more efficient approach by updating only a small portion of the model’s parameters during fine-tuning. Strictly speaking, PEFT is not an RLHF technique; it can be combined with either supervised fine-tuning or RLHF.

The technique updates only a subset of the LLM’s parameters, usually by adding new task-specific layers or modifying existing ones. This significantly lowers storage and computational requirements while often achieving performance comparable to full fine-tuning.
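The article does not name a specific PEFT technique; LoRA (low-rank adaptation) is one widely used example and is swapped in here for illustration. LoRA freezes a weight matrix W and learns a low-rank update B·A, scaled by alpha / rank, with B initialized to zeros. The sketch compares trainable-parameter counts for a hypothetical 4096 x 4096 layer and shows the adapted forward pass on a tiny matrix.

```python
def full_params(d_in, d_out):
    return d_in * d_out


def lora_params(d_in, d_out, rank):
    # LoRA trains A (rank x d_in) and B (d_out x rank) while the original W stays frozen.
    return rank * (d_in + d_out)


def matvec(matrix, vector):
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def lora_forward(W, A, B, x, alpha, rank):
    # y = W x + (alpha / rank) * B (A x)
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    return [b + (alpha / rank) * u for b, u in zip(base, update)]


d = 4096  # hypothetical square projection layer size
print(full_params(d, d), lora_params(d, d, rank=8))  # 16777216 65536

# With B initialized to zeros, the adapted layer starts out identical to the frozen one.
W = [[1.0, 2.0], [3.0, 4.0]]
A = [[0.5, -0.5]]  # rank 1
B = [[0.0], [0.0]]
print(lora_forward(W, A, B, [1.0, 1.0], alpha=16, rank=1))  # [3.0, 7.0]
```

For that hypothetical layer, rank 8 trains 65,536 parameters instead of 16,777,216, roughly 0.4% of the full matrix.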

The methods listed above are the ones most commonly used by businesses exploring custom LLM implementations. While the use cases for each vary, the way they are implemented is fairly similar.

Here’s how.

The LLM fine-tuning process, although fairly straightforward, calls for a careful implementation of the model, especially when a brand’s digital transformation initiatives are at stake.

In the next section, let’s look at the LLM fine tuning best practices that businesses should consider when augmenting their generative AI development services.

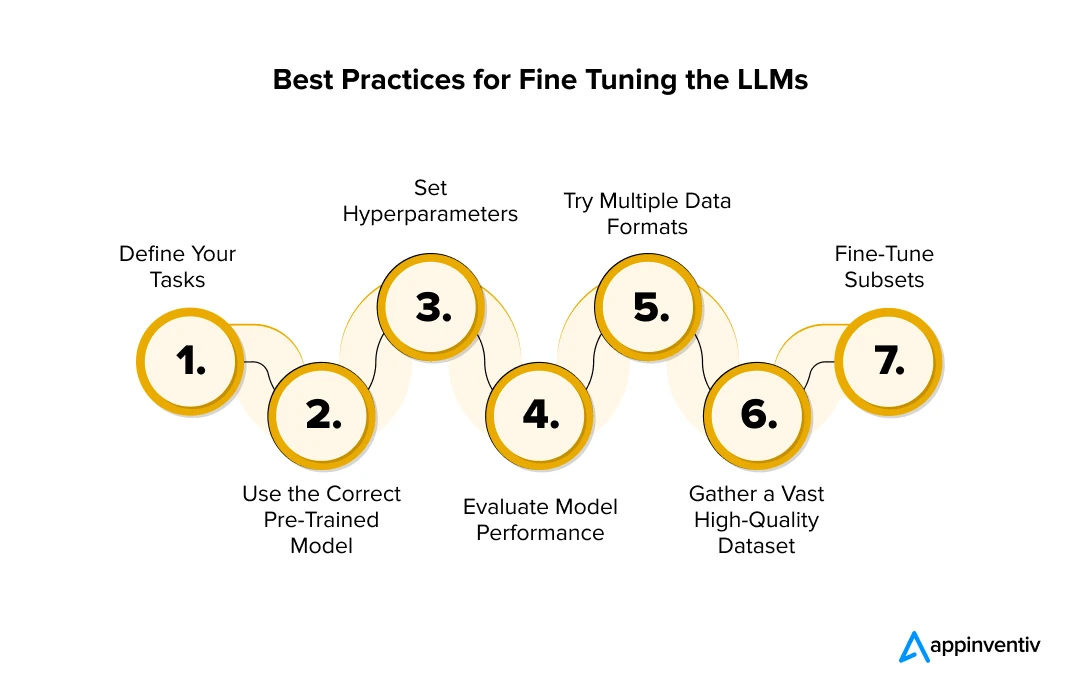

LLM Fine Tuning Best Practices

Fine-tuning large language models requires a systematic approach to ensure that the models achieve optimal performance and effectively adapt to specific tasks. The following best practices outline key steps and considerations that can enhance the fine-tuning process, from data preparation to model deployment.

By following these guidelines, you can maximize the accuracy, efficiency, and applicability of your fine-tuned LLM.

Define Your Tasks

Defining the task you want the LLM to perform is the foundational step of the LLM fine-tuning process. A well-defined task gives businesses clarity on how they want their AI application to operate and what benefits they expect from it. At the same time, it ensures that the model’s capabilities are aligned with key goals and that benchmarks for measuring performance are clearly set.

Use the Correct Pre-Trained Model

Choosing the right pre-trained model is critical. Starting from a pre-trained model is how businesses use knowledge gathered from vast datasets, so that the LLM doesn’t have to learn from scratch. This approach is not just computationally efficient and time-saving but also allows fine-tuning to concentrate on domain-specific nuances, leading to better performance on complex tasks.

Set Hyperparameters

Hyperparameters are adjustable settings that play an important role in LLM training. Batch size, learning rate, number of epochs, and weight decay are the key hyperparameters businesses need to adjust to find the best configuration for their task.

Evaluate Model Performance

Once fine-tuning is finished, the model’s performance should be assessed on a held-out test set. This offers an unbiased view of how well the model performs on unseen data compared to the original expectations. Based on the evaluation metrics, teams should continue refining the model until further improvements become marginal.
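For a classification-style task, the test-set assessment can start with a few standard metrics. The sketch below assumes binary labels and hypothetical predictions from a fine-tuned model; generative tasks usually need task-specific or human evaluation instead.

```python
def classification_metrics(y_true, y_pred, positive=1):
    # Count true positives, false positives and false negatives for the positive class.
    tp = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    fp = sum(t != positive and p == positive for t, p in zip(y_true, y_pred))
    fn = sum(t == positive and p != positive for t, p in zip(y_true, y_pred))
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return {
        "accuracy": correct / len(y_true),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


# Hypothetical held-out labels vs. the fine-tuned model's predictions (1 = complaint, 0 = other).
y_true = [1, 0, 1, 1, 0, 0]
y_pred = [1, 0, 0, 1, 1, 0]
print(classification_metrics(y_true, y_pred))
```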

Try Multiple Data Formats

Depending on your task, different data formats can have a varied impact on the model’s performance. For example, for a classification task, you can use a format that separates the prompt from its completion with a special token, like {"prompt": "James##\n", "completion": " name\n###\n"}. The key is to use the format that best suits the use case you are trying to accomplish.
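A small script can convert labeled pairs into that prompt/completion layout, one JSON object per line. The separator `##\n` and end marker `\n###\n` simply mirror the example format; they are assumptions that should match whatever your fine-tuning pipeline expects, and some newer fine-tuning APIs take chat-style message lists instead.

```python
import json

# Assumed markers, copied from the example format; adjust them to your pipeline's convention.
PROMPT_SEPARATOR = "##\n"
COMPLETION_END = "\n###\n"


def to_record(text, label):
    # The completion starts with a space and ends with the stop marker, as in the example.
    return {"prompt": text + PROMPT_SEPARATOR, "completion": " " + label + COMPLETION_END}


def to_jsonl(pairs):
    # One JSON object per line (JSON Lines), a common format for fine-tuning datasets.
    return "\n".join(json.dumps(to_record(text, label)) for text, label in pairs)


print(to_jsonl([("James", "name"), ("Paris", "city")]))
```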

Gather a Vast High-Quality Dataset

LLMs are notoriously data-hungry, and rightfully so. For the best performance, it is important to have a diverse and representative dataset to fine-tune on. However, gathering and labeling large datasets can be expensive and time-intensive.

To address this, you can use synthetic data generation techniques to increase the variety and scale of your dataset. However, you must also make sure that the synthetic data is highly relevant and consistent with your expected tasks and domain, and that it introduces as little noise or bias as possible.

Fine-Tune Subsets

To gauge the value your data provides, fine-tune the LLM on a subset of your current dataset and gradually increase the subset size. This helps you estimate the LLM’s learning curve and shows whether it needs more data to perform well and scale.
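A minimal sketch of the subset strategy, assuming you shuffle once and take nested prefixes so each larger subset contains the smaller ones. The fine-tuning and evaluation step itself is left out, and the scores in the example are hypothetical; the helper simply flags when the latest increase in data stopped paying off.

```python
import random


def nested_subsets(dataset, fractions, seed=0):
    # Shuffle once, then take prefixes so every smaller subset is contained in the larger ones.
    shuffled = list(dataset)
    random.Random(seed).shuffle(shuffled)
    return {f: shuffled[: max(1, round(len(shuffled) * f))] for f in sorted(fractions)}


def has_plateaued(scores, min_gain=0.01):
    # True when the last increase in data size improved the evaluation score by less than min_gain.
    return len(scores) >= 2 and scores[-1] - scores[-2] < min_gain


subsets = nested_subsets(range(1000), [0.1, 0.25, 0.5, 1.0])
print({f: len(s) for f, s in subsets.items()})  # {0.1: 100, 0.25: 250, 0.5: 500, 1.0: 1000}

# Hypothetical validation scores after fine-tuning on each subset size in turn.
print(has_plateaued([0.61, 0.70, 0.74, 0.75], min_gain=0.02))  # True
```

If the curve is still rising steeply at the full dataset, collecting more data is likely worthwhile; if it has flattened, effort is better spent elsewhere.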

In addition to implementing the best practices, it is equally important to know the probable challenges of fine-tuning large language models that a business might face in their AI journey.

At Appinventiv, when we build an AI product, we don’t just power it up with the right LLM fine tuning models but also follow a proactive approach when it comes to identifying and solving issues.

Overcoming LLM Fine Tuning Challenges

Fine-tuning large language models (LLMs) involves several intricate challenges that can impact the effectiveness and efficiency of the process. Addressing these challenges requires strategic approaches to ensure optimal model performance and seamless deployment. Below are key challenges faced during fine-tuning and practical solutions to overcome them:

- Data Quality and Quantity: Obtaining a high-quality, well-labeled dataset can be difficult and time-consuming, which can lead to suboptimal model performance. Focus on curating diverse and comprehensive datasets relevant to the specific task and employ data augmentation techniques, such as generating synthetic data or using data transformation methods, to enhance the dataset and improve model robustness.

- Computational Resources: Fine-tuning LLMs demands substantial computational power, often leading to high costs and extended training times. Leveraging cloud-based solutions or dedicated high-performance hardware can help manage these demands efficiently. Additionally, distributed computing and parallel processing can shorten training time by spreading the workload across multiple devices.

- Overfitting: A common issue is overfitting, where the model performs well on training data but poorly on unseen data. Mitigate this by implementing dropout, which randomly drops neurons during training to prevent over-reliance on specific features. Early stopping can also be effective, halting training when performance on a validation set starts to degrade.

Additionally, selectively freezing certain layers allows the model to retain general knowledge while focusing on learning task-specific features in the later layers.

- Hyperparameter Tuning: Finding the right hyperparameters, such as learning rate, batch size, and the number of epochs, can be challenging due to the need for extensive experimentation. Automated hyperparameter optimization methods, such as grid search or Bayesian optimization, can streamline this process by systematically exploring different parameter settings and identifying the best configuration.

- Model Evaluation: Ensuring the model generalizes well to new data involves using a validation set to monitor key metrics such as accuracy, precision, recall, and loss throughout the fine-tuning process. Continuous evaluation allows for the identification of potential issues early on and enables adjustments to the model or training parameters as needed.

- Deployment Challenges: Deployment must be addressed to ensure the fine-tuned model performs reliably in real-world applications. Optimize the model for scalability to handle varying loads and ensure it integrates seamlessly with existing systems. Implement robust security measures to protect the model and the data it processes. Additionally, monitoring the model’s performance post-deployment and making necessary adjustments ensures it continues to perform effectively over time.
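Early stopping, mentioned under overfitting, can be sketched as a small helper that watches validation loss. The patience value and the loss sequence are hypothetical; most training frameworks ship their own version of this logic.

```python
import math


class EarlyStopping:
    """Signal a stop once validation loss fails to improve for `patience` consecutive epochs."""

    def __init__(self, patience=2, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = math.inf
        self.bad_epochs = 0

    def step(self, val_loss):
        # An epoch counts as an improvement only if it beats the best loss by more than min_delta.
        if val_loss < self.best - self.min_delta:
            self.best = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


# Hypothetical per-epoch validation losses from a fine-tuning run.
val_losses = [1.00, 0.80, 0.70, 0.72, 0.75, 0.60]
stopper = EarlyStopping(patience=2)
for epoch, loss in enumerate(val_losses):
    if stopper.step(loss):
        print(f"stopping after epoch {epoch}; best validation loss {stopper.best}")
        break
```

The run stops before the later dip to 0.60, so choosing patience is a trade-off between wasted compute and missed late improvements. In practice you would also keep a checkpoint of the weights from the best epoch.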

Fine Tuning LLM Models in Business – The Way Forward

The future of LLM fine-tuning in a business depends on how deeply that business examines its AI plans. The greater the need for customization, the greater the focus on fine-tuning should be.

Whether fine-tuning is right for you can be determined by asking a few simple questions:

- Do you have limited data?

- Are you operating on a limited budget?

- Can your needs be divided into tasks?

- Do you require domain-level expertise?

- Do you work in a data-regulated sector?

If most of your answers are yes, fine-tuning could be the way forward. And while we have already explored the many ways the technology can impact businesses, it is equally important to note that the journey is not straightforward. There are a number of interlinked facets to consider, and these elements will define the project’s success.

The best move forward for a business would be to partner with a firm that has worked with fine-tuning and knows the nuances of the implementation process. This is where Appinventiv comes in.

We have worked on a number of projects that required customized LLMs (an approach we recommended to our partners to save them money and time). This multi-industry expertise has brought us to a place where we now know the nuances of fine-tuning models: the process, the best approach, and the pitfalls to avoid.

Partner with us to take your innovative AI project ahead.

FAQs

Q. How to fine-tune a large language model?

A. To fine-tune a large language model, start by preparing a high-quality, task-specific dataset. Choose a pre-trained model that aligns with your needs, then configure fine-tuning parameters such as learning rate and epochs. Train the model on your dataset, validate its performance, and iterate as necessary to optimize results.

Q. How much data is needed for LLM fine-tuning?

A. The amount of data needed for fine-tuning depends on the complexity of the task and the desired accuracy. Generally, a few thousand high-quality examples are sufficient, but more data can improve performance and robustness.

Q. How to fine-tune LLM with your own data?

A. To fine-tune an LLM with your own data, first prepare your dataset by cleaning and formatting it. Choose a suitable pre-trained model, configure the fine-tuning parameters, and train the model using your data. Validate and iterate to achieve the best results.
