- What is Prompt Engineering in AI?

- Benefits and Applications of AI Prompt Engineering

- Improved Accuracy in Decision Making

- Streamlined Workflow Automation

- Innovative Research and Development

- Personalized Recommendations

- Real-Life Use Cases and Examples of Prompt Engineering

- Microsoft’s Enhanced AI Performance with Prompt Engineering

- Thomson Reuters’ Streamlined Data Extraction via Prompt Techniques

- OpenAI’s Text Generation Advancements through Prompt Engineering

- GitHub’s Enhanced Code Generation with Prompt Techniques

- Google’s Accurate Translation with Prompt Techniques

- Key Aspects of AI Prompt Engineering

- Context Provision

- Clarity and Specificity

- Task-Focused Orientation

- Iterative Fine Tuning

- Flexibility and Adaptability

- Persona and Role Specifications

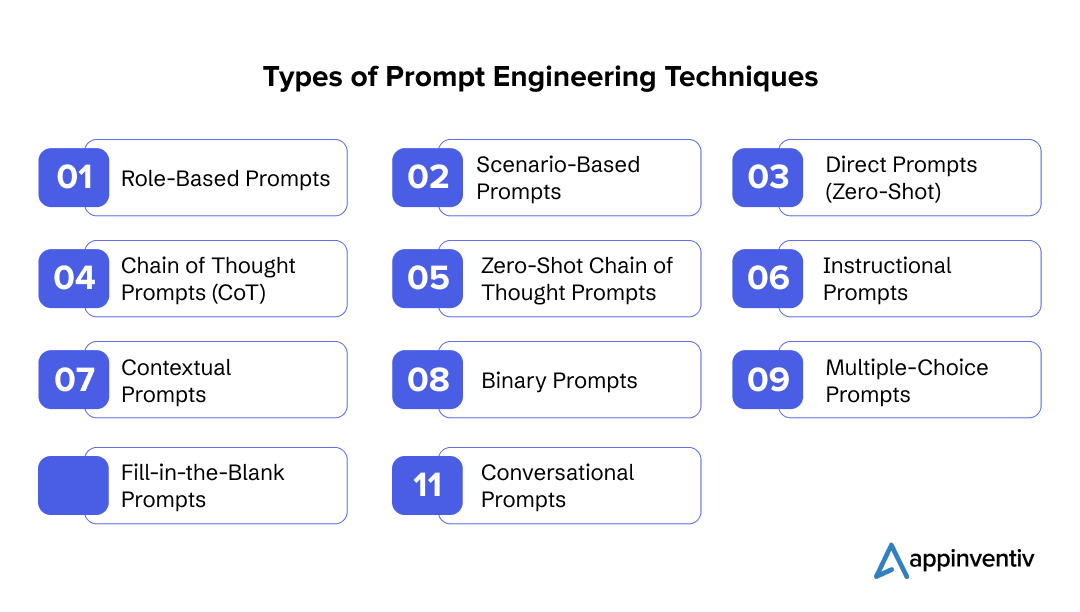

- Decoding Different Prompt Engineering Techniques

- Role-Based Prompts

- Scenario-Based Prompts

- Direct Prompts (Zero-Shot)

- Chain of Thought Prompts (CoT)

- Zero-Shot Chain of Thought (CoT) Prompts

- Instructional Prompts

- Contextual Prompts

- Binary Prompts

- Multiple-Choice Prompts

- Fill-in-the-Blank Prompts

- Conversational Prompts

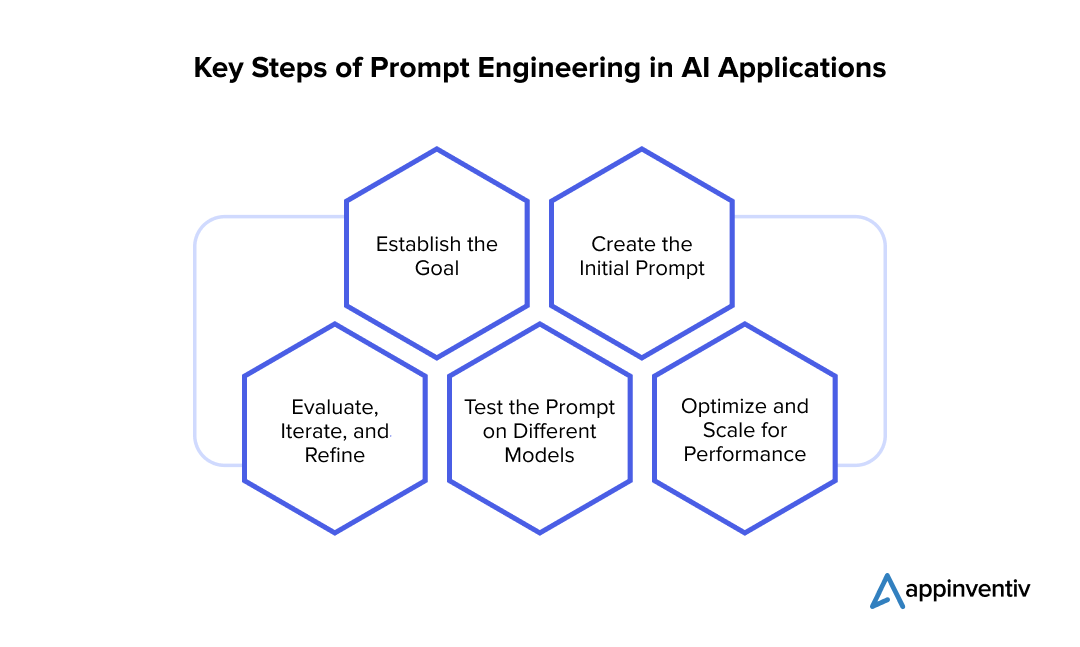

- Step-by-Step Process to AI Prompt Engineering

- Establish the Goal

- Create the Initial Prompt

- Evaluate, Iterate, and Refine

- Test the Prompt on Different Models

- Optimize and Scale for Performance

- Future of Prompt Engineering in AI Applications

- Initiate Your Prompt Engineering Journey with Appinventiv

- FAQs

Navigating the complexities of AI often involves tackling the unpredictability of model responses. This is where prompt engineering comes into play. By skillfully designing and refining input prompts, we can guide generative AI models like GPT-4 to produce more accurate, relevant, and engaging outputs.

This process combines clarity, context, and adaptability, revolutionizing AI interactions. Additionally, prompt engineering helps address ethical concerns and reduce biases. Ultimately, this innovative approach unlocks AI’s full potential, making the technology more intuitive and effective for users.

Recent advancements in AI prompt engineering are evident with new tools from tech giants like Microsoft and Amazon. Microsoft’s prebuilt AI functions integrate seamlessly with low-code solutions, eliminating the need for custom prompt engineering, as demonstrated by Projectum’s enhanced project management solution.

Amazon supports prompt engineering with tools like Amazon Q Developer for real-time code suggestions, Amazon Bedrock for generative AI application development via API without infrastructure management, and Amazon SageMaker JumpStart for discovering and deploying open-source language models.

In addition, according to a recent report by CIO, Salesforce is introducing two new prompt engineering features to its Einstein 1 platform, aiming to accelerate the development of generative AI applications in the enterprise sector.

All these advancements in prompt engineering in artificial intelligence applications highlight the fact that the industry is rapidly evolving to make AI more accessible and effective. This progress is paving the way for more sophisticated and user-friendly AI solutions, ultimately driving innovation across various sectors.

In this blog, we will explore the role of prompt engineering in AI applications, its key aspects, techniques, and real-life use cases. We will also delve into how it works and its promising future. Let’s dive in.

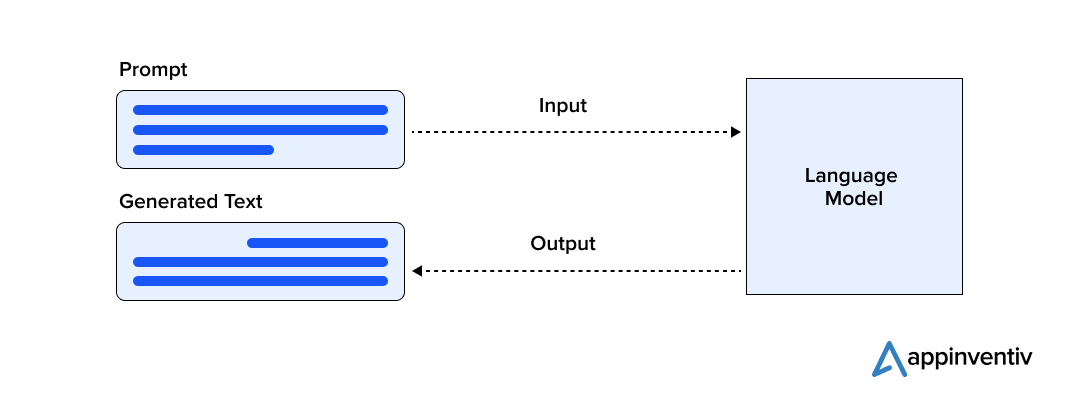

What is Prompt Engineering in AI?

The technique of carefully crafting inputs or queries to direct AI models toward generating particular, intended outcomes is known as prompt engineering. This method is particularly important for natural language processing (NLP) and other AI applications, as the formulation of the initial prompts has a significant impact on the quality and relevancy of the content created.

Prompt engineering, when done well, can greatly improve AI systems’ accuracy, performance, and usability by better aligning them with user goals and decreasing the number of irrelevant or biased responses.

Prompt engineering is important because it can improve AI behavior, making models more efficient and reliable at their tasks. By refining prompts, you can steer AI outputs to be more contextually appropriate and useful, which is essential for applications in customer service, content creation, decision-making support, and more.

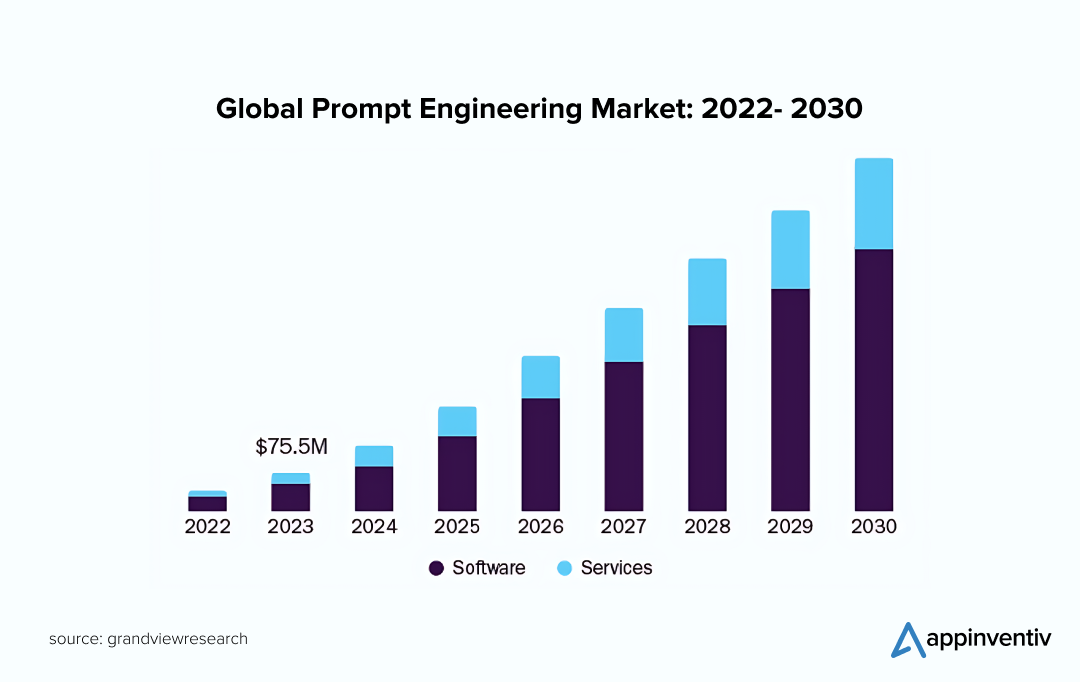

As per Statista, the global prompt engineering market is projected to reach $2.06 billion by 2030, growing at a CAGR of 32.8% from 2024 to 2030. This impressive growth is fueled by advancements in generative AI and the increasing digitalization and automation across various industries.

This trend highlights the crucial role of prompt engineering in enhancing sophisticated AI interactions within the AI ecosystem.

Benefits and Applications of AI Prompt Engineering

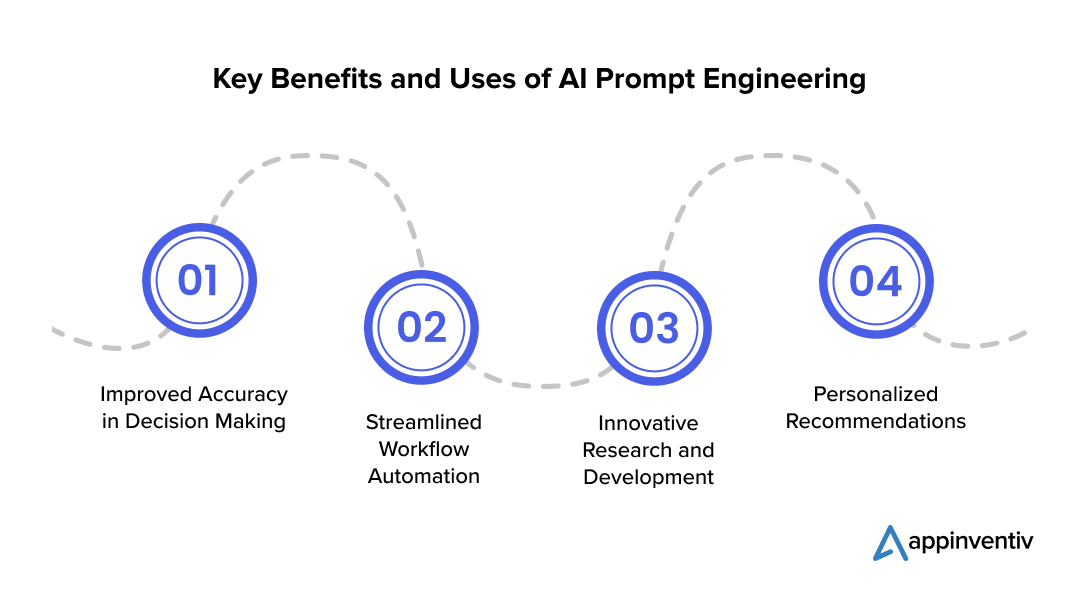

AI prompt engineering offers several benefits, including improved efficiency, accuracy, and personalization. The technique enhances a model’s ability to handle complex tasks and provides tailored outputs across various applications, ultimately boosting overall performance and user experience. Here are some of the benefits and applications of AI prompt engineering.

Improved Accuracy in Decision Making

By designing precise prompts, AI systems can deliver more accurate and relevant outputs, which enhances decision-making and reliability in critical areas like medical diagnostics and data analysis.

Effective prompts help AI models better grasp context and other nuances, minimizing errors and improving the quality of information provided. This results in more reliable insights and recommendations, crucial for tasks that demand high levels of precision and accuracy.

Streamlined Workflow Automation

Prompt engineering enhances the automation of routine tasks in sectors such as finance and administration, making processes more efficient. By refining AI’s handling of repetitive tasks, organizations can reallocate human resources to more strategic roles that require creativity and problem-solving.

Automated functions like document processing and data entry become more accurate and faster, improving overall operational efficiency and allowing employees to focus on higher-value activities.

Innovative Research and Development

In the field of academic and scientific research, prompt engineering aids in tackling complex problems by generating insightful hypotheses and summarizing extensive data. Effective prompts guide AI models to produce valuable insights and identify trends, accelerating the research process.

This capability helps researchers synthesize information more efficiently and supports innovation by providing clearer, more actionable findings.

Personalized Recommendations

In eCommerce and entertainment industries, prompt engineering allows AI to generate highly personalized recommendations based on user preferences and behavior. Tailored prompts enable AI to offer suggestions that closely align with individual interests, improving user engagement and satisfaction.

This level of personalization not only enhances the user experience but also drives increased sales and customer loyalty by presenting users with relevant and appealing content or products.

Real-Life Use Cases and Examples of Prompt Engineering

Applications and real-life use cases of prompt engineering in AI applications span various industries. They enhance customer service interactions, optimize search queries, and personalize user experiences in software applications. Let’s look at some of these popular applications.

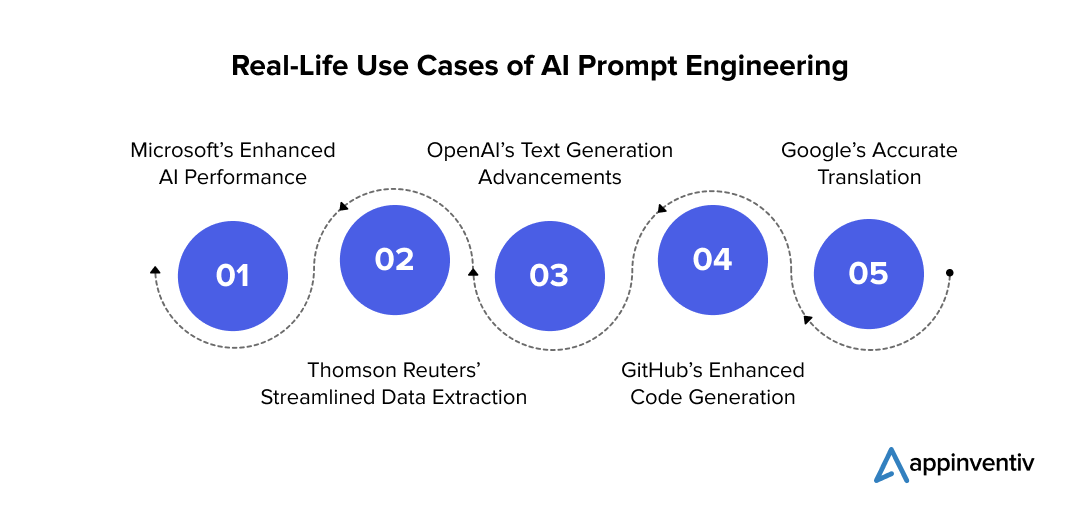

Microsoft’s Enhanced AI Performance with Prompt Engineering

Prompt engineering enhances AI systems’ ability to provide precise answers to user queries by leveraging extensive datasets, thereby improving efficiency in information retrieval.

Microsoft utilizes prompt engineering to refine AI models, optimizing their ability to generate accurate and contextually relevant responses. This involves crafting precise prompts that guide the AI’s language comprehension and reasoning capabilities. By continuously iterating and customizing prompts based on user feedback, Microsoft enhances the overall performance and usability of AI-driven applications integrated with Azure AI services.

Thomson Reuters’ Streamlined Data Extraction via Prompt Techniques

Prompt engineering plays a crucial role in extracting structured information from unstructured text and optimizing data analysis processes.

Thomson Reuters employs prompt engineering in its legal research tools to extract relevant case law and legal precedents from vast legal document databases, streamlining the research process for legal professionals.

OpenAI’s Text Generation Advancements through Prompt Engineering

Prompt engineering in AI applications facilitates the generation of marketing content, product descriptions, and creative writing, offering significant time and resource savings while maintaining high-quality outputs.

OpenAI utilizes prompt engineering in its GPT-4 model to aid companies like Copy.ai in creating compelling marketing copy and blog posts. This technology enables businesses to quickly generate various types of text content, reducing the need for manual writing and editing.


GitHub’s Enhanced Code Generation with Prompt Techniques

Prompt engineering assists developers by generating code snippets and solutions based on descriptive prompts.

GitHub employs prompt engineering in its Copilot tool, powered by OpenAI, to streamline code development by suggesting relevant code snippets and functions, enhancing coding efficiency and productivity for software developers.

Google’s Accurate Translation with Prompt Techniques

Prompt engineering enhances the accuracy and efficiency of translating text across multiple languages, ensuring clear communication globally. It enables AI to interpret and generate translations based on contextual prompts.

Google integrates prompt engineering in Google Translate, leveraging vast datasets to provide precise translations instantly. This technology supports seamless communication for millions of users worldwide, improving cross-cultural interactions and business operations.

Key Aspects of AI Prompt Engineering

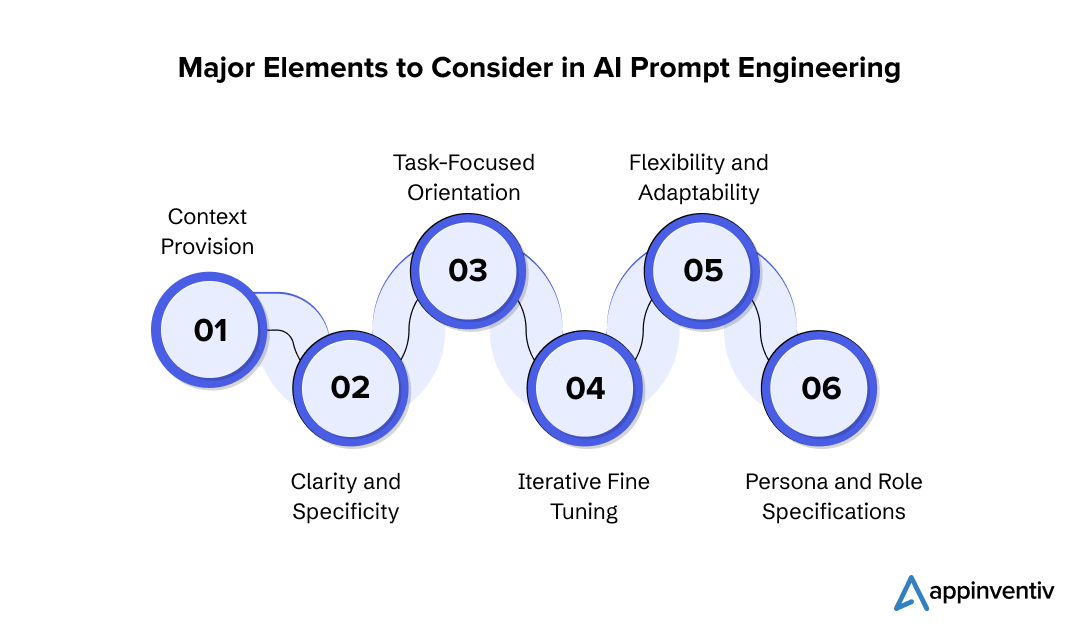

Engineering AI prompts means writing clear instructions that elicit the right responses, following ethical guidelines, and making full use of a language model’s capabilities to improve user interaction. Here are some of the key aspects or elements to consider when integrating prompt engineering in AI applications.

Context Provision

When the prompt includes sufficient context, AI can generate smarter and more appropriate responses. This can encompass examples, background information, and detailed instructions. Providing AI with a deep understanding of the situation significantly enhances the relevance and accuracy of its outputs. Contextual knowledge empowers the AI model to detect nuances and subtleties, thereby improving the overall quality of its responses.
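As a minimal sketch of this idea, the Python helper below assembles background information, examples, and an instruction into a single prompt. The function name `build_prompt`, the section labels, and the sample store policy are illustrative assumptions, not part of any particular library or product.

```python
from typing import List, Optional


def build_prompt(
    instruction: str,
    background: str = "",
    examples: Optional[List[str]] = None,
) -> str:
    """Join optional background, optional examples, and the task into one prompt."""
    parts = []
    if background:
        parts.append(f"Background:\n{background}")
    if examples:
        # Number the examples so they are easy to tell apart.
        numbered = "\n".join(f"{i}. {ex}" for i, ex in enumerate(examples, start=1))
        parts.append(f"Examples:\n{numbered}")
    parts.append(f"Task:\n{instruction}")
    return "\n\n".join(parts)


# Hypothetical content used only to show the resulting layout.
prompt = build_prompt(
    instruction="Summarize the refund policy in two sentences for a customer.",
    background="The store accepts returns within 30 days with a receipt.",
    examples=["Shipping policy summary: Orders ship within 2 business days."],
)
print(prompt)
```

Keeping the three parts separate makes it easy to test whether adding or removing context changes the quality of the response.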

Clarity and Specificity

Giving clear and specific prompts is one of the most crucial elements of prompt engineering in AI applications. Ensure that the prompt is explicit and unambiguous so the AI can comprehend the task and generate precise, pertinent responses. Ambiguous cues can produce a wide range of unexpected results, so give clear instructions to minimize the possibility of the AI model misinterpreting them.

Additionally, clearly specified prompts can save time by reducing the need for extensive follow-up edits and revisions. In the end, this clarity results in AI interactions that are more successful and efficient.

Task-Focused Orientation

Prompts must be customized for specific tasks or goals to ensure that AI outputs closely align with user expectations. Whether the goal is generating concise summaries, accurate translations, detailed responses to complex queries, or compelling creative content, task-oriented prompt design is crucial for guiding AI effectively.

Clarifying the goal within the prompt helps businesses direct AI’s computational resources more efficiently. This approach yields targeted and precise outputs that offer tangible value across various applications, such as legal document analysis and eCommerce recommendation systems.

Iterative Fine Tuning

Enhancing AI model performance involves a continual cycle of refining and testing prompts. Through ongoing adjustments informed by user input and AI feedback, prompts can be fine-tuned for better clarity, structure, and depth. This iterative approach also addresses biases and misinterpretations, enhancing the accuracy and reliability of AI outputs over time.

By committing to iterative refinement, organizations can develop more reliable and effective AI solutions that meet diverse customer needs and expectations, spanning applications such as financial forecasting and healthcare diagnostics.

Flexibility and Adaptability

Crafting adaptable prompts tailored to different contexts enhances the versatility of AI models. This flexibility allows businesses to reuse prompts across various applications, saving both time and resources. Ensuring prompt customization enables AI systems to consistently deliver efficient performance across diverse use cases, accommodating varied input formats, languages, and specialized domains seamlessly.

This flexibility increases operational effectiveness while also broadening the range of possible uses for AI technology, such as creating bilingual content for international audiences.
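One simple way to make a prompt reusable is to turn the parts that vary into placeholders. The sketch below uses Python’s standard `string.Template`; the template text, the placeholder names, and the `render` helper are hypothetical and chosen only for illustration.

```python
from string import Template

# Hypothetical reusable template; only the placeholders change between uses.
PRODUCT_TEMPLATE = Template(
    "Write a $tone product description in $language for: $product. "
    "Keep it under $max_words words."
)


def render(
    product: str,
    language: str = "English",
    tone: str = "friendly",
    max_words: int = 50,
) -> str:
    """Fill the shared template for one product, language, and tone."""
    return PRODUCT_TEMPLATE.substitute(
        product=product, language=language, tone=tone, max_words=max_words
    )


english = render("wireless earbuds")
spanish = render("wireless earbuds", language="Spanish", tone="formal")
print(english)
print(spanish)
```

The same template serves both languages, which is the kind of reuse the section describes for bilingual content.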

Persona and Role Specifications

Defining a specific role or character for AI facilitates tailored responses that reflect desired viewpoints or tones. For instance, instructing AI to respond as an informed educator, technical expert, or supportive customer service representative can adjust both the style and content of its outputs.

Businesses designate personas to ensure consistency and appropriateness in AI interactions across diverse user interfaces and communication channels. This personalized approach enhances user satisfaction and engagement and drives advancements in specialized AI applications such as virtual assistants and conversational interfaces.

Decoding Different Prompt Engineering Techniques

Understanding different prompt engineering techniques is crucial for optimizing AI interactions, from simple command-based prompts to more advanced conversational prompts. Effective techniques enhance AI model performance and user engagement, catering to diverse application needs and contexts.

Role-Based Prompts

This prompt assigns a specific role or perspective to the model, such as “You are a teacher. Explain the importance of homework to your students.”
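Many chat-style model interfaces accept a list of messages in which a system message sets the role and a user message carries the request. The exact message format depends on the provider, so the dictionary layout below is an assumption, and `role_based_messages` is a hypothetical helper.

```python
from typing import Dict, List


def role_based_messages(role_description: str, user_request: str) -> List[Dict[str, str]]:
    """Return a chat-style message list that assigns the model a role."""
    return [
        {"role": "system", "content": f"You are {role_description}."},
        {"role": "user", "content": user_request},
    ]


messages = role_based_messages(
    "a teacher",
    "Explain the importance of homework to your students.",
)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```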

Scenario-Based Prompts

This prompt describes a situation or scenario and asks the model to respond or solve a problem within that context, for example, “Imagine you are the CEO of a company facing a data breach. What steps would you take?”

Direct Prompts (Zero-Shot)

This method involves giving the model a direct instruction or question without any additional context or examples. Examples include asking it to generate marketing ideas or to provide a brief on a specific topic.

Chain of Thought Prompts (CoT)

This method encourages the model to break down complex reasoning into a series of intermediate steps, leading to a more comprehensive and well-structured final output.

Zero-Shot Chain of Thought (CoT) Prompts

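This method combines zero-shot prompting with chain-of-thought prompting: the model is asked to reason through intermediate steps without being shown any worked examples, typically by adding a phrase such as “Let’s think step by step.” This often produces better output on reasoning tasks.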
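A rough sketch of zero-shot chain-of-thought prompting in Python follows. It appends the commonly used trigger phrase “Let’s think step by step.” and asks for a marked final line so the answer can be pulled out afterwards. The marker text, both helper names, and the sample response are assumptions made for illustration; no real model is called.

```python
COT_TRIGGER = "Let's think step by step."
ANSWER_MARKER = "Final answer:"


def zero_shot_cot(question: str) -> str:
    """Add a reasoning trigger to a question without including worked examples."""
    return (
        f"{question}\n\n"
        f"{COT_TRIGGER} Finish with a line that starts with '{ANSWER_MARKER}'."
    )


def extract_final_answer(response: str) -> str:
    """Return the text after the last marker, or the whole response if it is absent."""
    _, found, tail = response.rpartition(ANSWER_MARKER)
    return tail.strip() if found else response.strip()


prompt = zero_shot_cot("A shop sells pens at 3 for $2. How much do 12 pens cost?")

# Made-up response standing in for real model output.
sample_response = "12 pens is 4 groups of 3. Each group costs $2.\nFinal answer: $8"
answer = extract_final_answer(sample_response)
print(prompt)
print(answer)
```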

Instructional Prompts

This prompt directs the model to perform a specific task, such as “Explain how photosynthesis works” or “List the major events of World War II.”

Contextual Prompts

This provides background information to help the model generate more relevant responses, such as “In the context of software development, describe the Agile methodology.”

Binary Prompts

This prompt poses a yes/no or true/false question to the model, such as “Is water essential for human life?”

Multiple-Choice Prompts

This prompt provides several options for the model to choose from, for example, “Which planet is known as the Red Planet? a) Earth, b) Mars, c) Jupiter.”
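Constrained formats such as multiple-choice prompts are convenient because the reply can be checked automatically. The sketch below builds the prompt from a list of options and validates the reply; `multiple_choice_prompt` and `parse_choice` are hypothetical helpers, and the accepted reply formats are an assumption.

```python
import re
from string import ascii_lowercase
from typing import List, Optional


def multiple_choice_prompt(question: str, options: List[str]) -> str:
    """Label each option with a letter and ask for the letter only."""
    lettered = ", ".join(
        f"{letter}) {option}" for letter, option in zip(ascii_lowercase, options)
    )
    return f"{question} {lettered}. Answer with the letter only."


def parse_choice(response: str, options: List[str]) -> Optional[str]:
    """Return the chosen option, or None if the reply is not a valid letter."""
    match = re.fullmatch(r"\s*\(?([a-z])\)?[.)]?\s*", response.lower())
    if not match:
        return None
    index = ascii_lowercase.index(match.group(1))
    return options[index] if index < len(options) else None


options = ["Earth", "Mars", "Jupiter"]
prompt = multiple_choice_prompt("Which planet is known as the Red Planet?", options)
print(prompt)
print(parse_choice("b)", options))
```

Replies that do not match an offered letter return `None`, which signals that the prompt may need a stricter instruction or a retry.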

Fill-in-the-Blank Prompts

This prompt presents a sentence with missing words that the model needs to fill in, like “The capital of Italy is _____.”

Conversational Prompts

This prompt mimics a natural conversation, guiding the model to generate responses that fit into a dialogue, like “What are some effective ways to reduce stress?”

Step-by-Step Process to AI Prompt Engineering

Prompt engineering is an iterative process: you define a goal, draft a prompt, evaluate the output, and refine the prompt until the results are reliable. Here’s how prompt engineering in AI applications works:

Establish the Goal

Establishing the project’s goal is a vital initial step in AI prompt engineering. This entails defining the purpose and scope of the AI prompts, such as producing content for a certain niche or generating text for customer support conversations.

By defining a clear goal, teams can coordinate their efforts to create prompts that accomplish the desired aims. This clarity also aids in selecting the right AI model and tools for the job, guaranteeing effective resource allocation.

Create the Initial Prompt

Crafting the initial prompt is the foundational step in prompt engineering. It involves carefully designing a set of instructions that directs the language model’s output, based on a thorough analysis of the problem, so that the prompt guides the model towards the desired responses.

A well-crafted initial prompt sets the stage for accurate and relevant output from the AI. It also establishes the parameters for how the model will interpret and respond to queries, which affects the overall usefulness of the generated results.

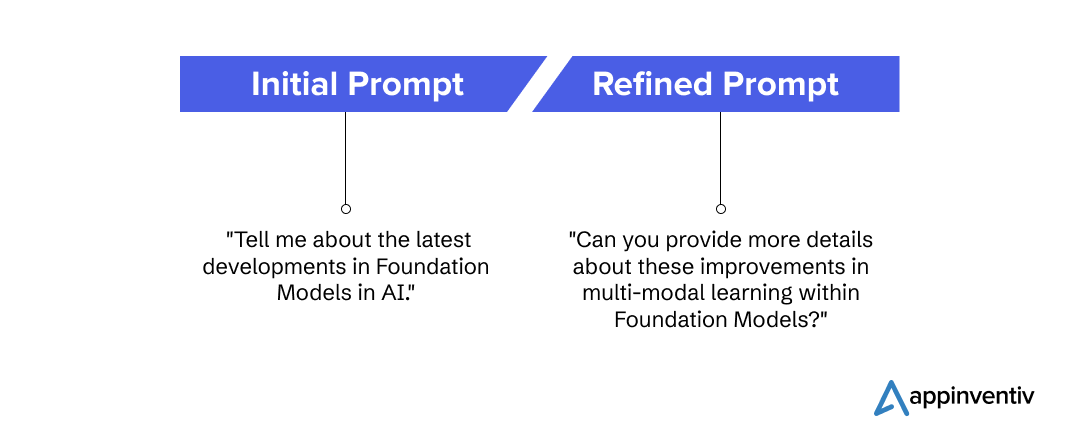

Evaluate, Iterate, and Refine

Once the initial prompt is used to generate a response from the model, evaluating this output becomes a critical phase. This step assesses how well the prompt performs in eliciting the intended information or behavior from the AI. It helps determine the prompt’s effectiveness and the model’s interpretive accuracy, providing insights into whether the prompt meets the requirements or needs adjustments.

Through careful evaluation, you can identify specific areas for improvement and refine the prompt to better align with the desired outcomes, ensuring that it effectively guides the model in future interactions. A refined prompt typically differs from the initial one by adding the constraints, context, or format requirements that the first output showed were missing.
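To make the evaluation step concrete, the Python sketch below scores an output against two simple checks, a word limit and a list of required terms, and compares an initial prompt with a refined one. The prompts, the outputs, and the `evaluate` helper are all hypothetical; the outputs are hard-coded stand-ins for real model responses.

```python
from typing import Dict, List


def evaluate(output: str, required_terms: List[str], max_words: int) -> Dict[str, object]:
    """Score one model output against simple, task-specific checks."""
    word_count = len(output.split())
    missing = [term for term in required_terms if term.lower() not in output.lower()]
    return {
        "within_length": word_count <= max_words,
        "missing_terms": missing,
        "passed": word_count <= max_words and not missing,
    }


initial_prompt = "Write about our return policy."
refined_prompt = (
    "Write a customer-facing summary of our return policy in at most 40 words. "
    "Mention the 30-day window and that a receipt is required."
)

# Hypothetical outputs standing in for real model responses to each prompt.
initial_output = "Our company values customers and offers many policies that help shoppers."
refined_output = "You can return any item within 30 days of purchase. A receipt is required for a refund."

initial_report = evaluate(initial_output, ["30 days", "receipt"], max_words=40)
refined_report = evaluate(refined_output, ["30 days", "receipt"], max_words=40)
print(initial_report)
print(refined_report)
```

Real evaluations usually combine automated checks like these with human review, since keyword and length checks cannot judge tone or factual accuracy.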

Test the Prompt on Different Models

It is crucial to test the prompt across different models to ensure its effectiveness and reliability. By applying the prompt to multiple LLMs, you can observe how well it performs in different contexts. The testing phase offers a deeper understanding of the prompt’s versatility and helps identify model-related issues.

Additionally, it allows you to evaluate the prompt’s performance and adaptability, ensuring it functions consistently across diverse applications and environments. Testing thus helps refine and enhance the prompt further.
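Cross-model testing can be sketched as a small harness that sends one prompt to several models and applies the same check to each output. In the Python example below, the two model functions are stubs that return fixed strings; in practice each would wrap a real model client, which is outside the scope of this sketch. All names are hypothetical.

```python
from typing import Callable, Dict


def run_across_models(
    prompt: str,
    models: Dict[str, Callable[[str], str]],
    check: Callable[[str], bool],
) -> Dict[str, bool]:
    """Send one prompt to every model callable and record whether each output passes."""
    return {name: check(generate(prompt)) for name, generate in models.items()}


# Stub models returning fixed text; they stand in for real model clients.
def stub_model_a(prompt: str) -> str:
    return "Yes"


def stub_model_b(prompt: str) -> str:
    return "Water is essential because cells need it."


def is_yes_or_no(output: str) -> bool:
    """Accept only a bare yes or no, ignoring case and a trailing period."""
    return output.strip().lower().rstrip(".") in {"yes", "no"}


results = run_across_models(
    "Is water essential for human life? Answer yes or no.",
    {"model_a": stub_model_a, "model_b": stub_model_b},
    is_yes_or_no,
)
failing = [name for name, ok in results.items() if not ok]
print(results)
print(failing)
```

A model that fails the check is a cue to tighten the instruction or to keep a model-specific variant of the prompt.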

Optimize and Scale for Performance

The final stage involves optimizing the prompt and scaling it for better performance. Once an optimized prompt produces the desired results, it can be extended to broader tasks, contexts, or levels of automation. This step aims to leverage the refined prompt’s effectiveness on a larger scale, enhancing its utility and impact across various applications.

Also, scaling often requires integrating the prompt into more complex systems or workflows, ensuring that it performs well in diverse scenarios and maintains its effectiveness as the scope of use expands. This broader application helps maximize the prompt’s benefits and supports widespread adoption and implementation.

Future of Prompt Engineering in AI Applications

Looking forward, the future of prompt engineering in AI applications is poised for significant growth and continuous innovation. As AI models advance in complexity and capability, prompt engineering will play a crucial role in enhancing their accuracy, relevance, and ethical integrity.

Progress in natural language processing and machine learning will drive the development of prompts that are more intuitive and contextually aware, thereby transforming user interactions across various domains. Additionally, the integration of low-code and no-code platforms will democratize prompt engineering, making it accessible to a broader range of users and accelerating its adoption across industries.

The rapid advancement of AI models necessitates agile prompt engineering strategies capable of adapting to new functionalities and complexities. The increasing emphasis on personalized AI interactions will drive innovations in prompt engineering, ensuring that prompts are tailored to meet individual user preferences and needs more effectively. Furthermore, as AI finds applications in diverse fields, specialized prompt engineering approaches will be essential to optimize performance and relevance across different domains.

Initiate Your Prompt Engineering Journey with Appinventiv

The future of prompt engineering promises to elevate AI technology to new levels of usability, efficiency, and ethical compliance, driving a future where AI systems seamlessly integrate into everyday life and enhance human experiences.

Appinventiv is a leading AI development services provider that leverages advanced artificial intelligence technologies to execute transformative projects like YouComm, Vyrb, Mudra, JobGet and others.

Our expertise in prompt engineering ensures that we develop solutions that are not only highly effective but also responsibly designed, meeting diverse user needs while adhering to ethical standards. We use various prompt engineering methods for our clients’ projects, the most commonly used being:

Zero-Shot Learning: Assigning the AI a task without any prior examples, using only a detailed description to guide its response.

Prompt: “Explain what a large language model is.”

One-Shot Learning: Providing a single example alongside the prompt to give the AI a clear understanding of the expected context or format.

Prompt: “A Foundation Model in AI, such as GPT-3, is mainly trained on large datasets and adaptable to perform various tasks. Explain the functions of BERT in this context.”

Few-Shot Learning: Supplying a few examples (typically 2–5) to help the AI grasp the pattern or style you’re looking for in its response.

Prompt: “Foundation Models like GPT-3 are used for natural language processing, and DALL-E for image generation. How are they applied in robotics?”

Chain-of-Thought Prompting: Instructing the AI to explain its thought process step-by-step, which is useful for tasks that require complex reasoning.

Prompt: “Describe, step by step, the process of developing a Foundation Model in AI, from data collection to model training, and explain the reasoning behind each stage.”
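The difference between zero-shot, one-shot, and few-shot prompting comes down to how many worked examples are included before the actual query. The Python sketch below makes that explicit with one builder that takes a list of example pairs. The `Input:`/`Output:` layout, the function name, and the sample reviews are illustrative assumptions rather than a required format.

```python
from typing import List, Tuple


def build_shot_prompt(task: str, examples: List[Tuple[str, str]], query: str) -> str:
    """No examples gives zero-shot, one gives one-shot, several give few-shot."""
    blocks = [task]
    for example_input, example_output in examples:
        blocks.append(f"Input: {example_input}\nOutput: {example_output}")
    # The final block leaves the output empty for the model to complete.
    blocks.append(f"Input: {query}\nOutput:")
    return "\n\n".join(blocks)


task = "Classify the sentiment of each review as positive or negative."
examples = [
    ("The battery lasts all day.", "positive"),
    ("The screen cracked within a week.", "negative"),
]
query = "Setup took five minutes."

zero_shot = build_shot_prompt(task, [], query)
one_shot = build_shot_prompt(task, examples[:1], query)
few_shot = build_shot_prompt(task, examples, query)
print(few_shot)
```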

Partner with us to get started with prompt engineering in AI applications. We combine innovation with reliability, setting the stage for groundbreaking advancements in AI-driven technologies.

FAQs

Q. What is the purpose of prompt engineering in AI systems?

A. The purpose of prompt engineering in AI systems is to design and refine the inputs given to a model so that it produces accurate, relevant, and intended outputs. It relies on iterative testing and refinement of prompts, which helps align model responses with user goals, reduces irrelevant or biased answers, and lets teams adapt quickly as models and requirements change.

Q. What are the types of prompt engineering in AI applications?

A. Prompt engineering in AI applications involves crafting and refining prompts to generate desired responses from AI models, especially large language models like GPT-4. There are various types of prompt engineering AI, each suited to specific use cases and goals:

- Task-specific Prompting

- Few-shot Prompting

- Instruction-based Prompting

- Context-based Prompting

- Persona-based Prompting

- Zero-shot Prompting

- Multi-turn Prompting

- Chain-of-thought Prompting

Q. What are some of the major benefits of prompt engineering in AI applications?

A. AI prompt engineering improves the utility and performance of AI models in a number of ways. Among the main advantages are:

Personalization and Customization: Prompts can be customized to match particular characters, situations, or styles. This allows for more engaging, customized responses that work for various audiences and use cases.

Enhanced Relevance and Accuracy: Creating exact prompts can help the AI provide more relevant and accurate responses, ensuring that the final product closely complies with user requirements and expectations.

Increased Productivity: Prompts with a clear and concise design can expedite user engagement with AI models, minimizing the need for extensive post-processing or corrections and saving users’ time.

Greater Diversity of Applications: Prompt engineering can increase AI models’ capabilities, allowing them to handle a wider range of scenarios and jobs, from technical problem-solving to creative writing.

Facilitating Difficult Tasks: Users can enhance the clarity and depth of the AI’s reasoning and output by directing it to approach hard problems in an organized way through step-by-step or chain-of-thought prompting.

Enhanced User Experience: Prompt engineering, when done right, can improve end users’ overall experience and happiness by making AI interactions more natural and easy to use.

Uniformity in the Output: Standardizing prompts can help users obtain more consistent AI responses, which is important for preserving quality and dependability in applications like content creation and customer support.

Q. How to use prompt engineering in AI applications?

A. Here are some of the best prompt engineering strategies and uses in AI applications:

- Develop precise prompts to direct AI models towards desired outcomes.

- Integrate large datasets to train AI for precise and accurate responses.

- Continuously refine prompts based on user feedback to enhance relevance and effectiveness.

- Implement prompt engineering to improve AI’s language comprehension and reasoning.

- Apply prompt engineering across diverse applications to enhance performance and user engagement.
